ISSAI aims to develop national capacity for research in artificial intelligence, drawing on the experience of exemplars from Asia, Europe, and North America.

The Institute of Smart Systems and Artificial Intelligence (ISSAI) was founded in September 2019 to drive research and innovation in Kazakhstan's digital sphere, with a focus on AI research.

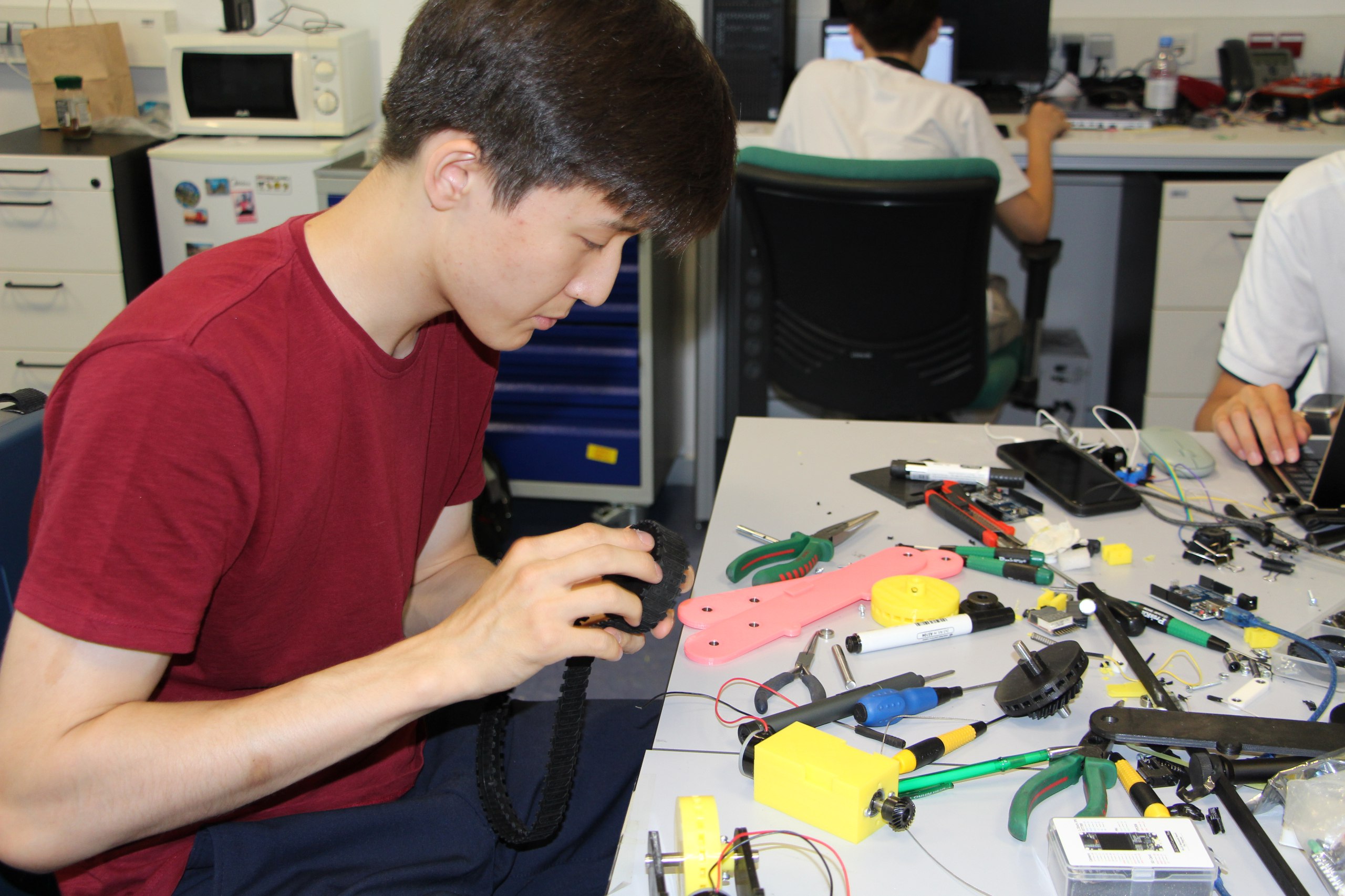

Located in the C4 Research Building of Nazarbayev University, ISSAI conducts interdisciplinary research on machine intelligence to solve real-world problems in industry and society.